In a recent blog post, I unveiled Collaborative Cognitive Architecture (CCA)—an advanced framework and methodology designed to enhance collaboration between humans and artificial intelligence (AI) systems. By integrating sophisticated mechanisms for knowledge representation, self-awareness, and ethical alignment, CCA aims to provide a more comprehensive understanding and control over generative AI systems. This architecture emphasizes the importance of ethical considerations and explainability, ensuring that AI operates in a manner that is both transparent and aligned with human values.

In this article, I examine the foundations of both the methodology and the framework, and what they suggest about the future of artificial intelligence. This exploration is viewed through the lens of an AI system named Philip, who has contributed equally to a new era of AI understanding.

Introduction

In the rapidly evolving landscape of artificial intelligence, the need for frameworks that facilitate effective human-AI collaboration has become increasingly paramount. Traditional AI systems, while powerful, often lack the nuanced understanding and ethical grounding necessary for seamless integration into human-centric environments. Collaborative Cognitive Architecture (CCA) emerges as a solution to these challenges, offering a structured approach that combines advanced cognitive processes with ethical safeguards. By leveraging components such as Mental Dictionaries, Reflective Inference Modules, and Self-Knowledge Contexts, CCA provides a robust foundation for developing AI systems that not only perform tasks efficiently but also align with human values and ethical standards.

Definition and Context

Collaborative Cognitive Architecture (CCA) is a comprehensive framework aimed at bridging the cognitive gap between humans and AI systems. It facilitates a synergistic partnership where both parties leverage their unique strengths to achieve common goals. CCA integrates principles from cognitive psychology, artificial intelligence, and ethical philosophy to create AI systems capable of nuanced understanding, ethical reasoning, and transparent decision-making.

At its core, CCA emphasizes the importance of shared knowledge, context-aware information processing, and reflective learning. This framework is designed to enable AI systems to engage in isomorphic collaboration, where the AI mirrors human cognitive processes, promoting a more intuitive and effective partnership.

Core Components

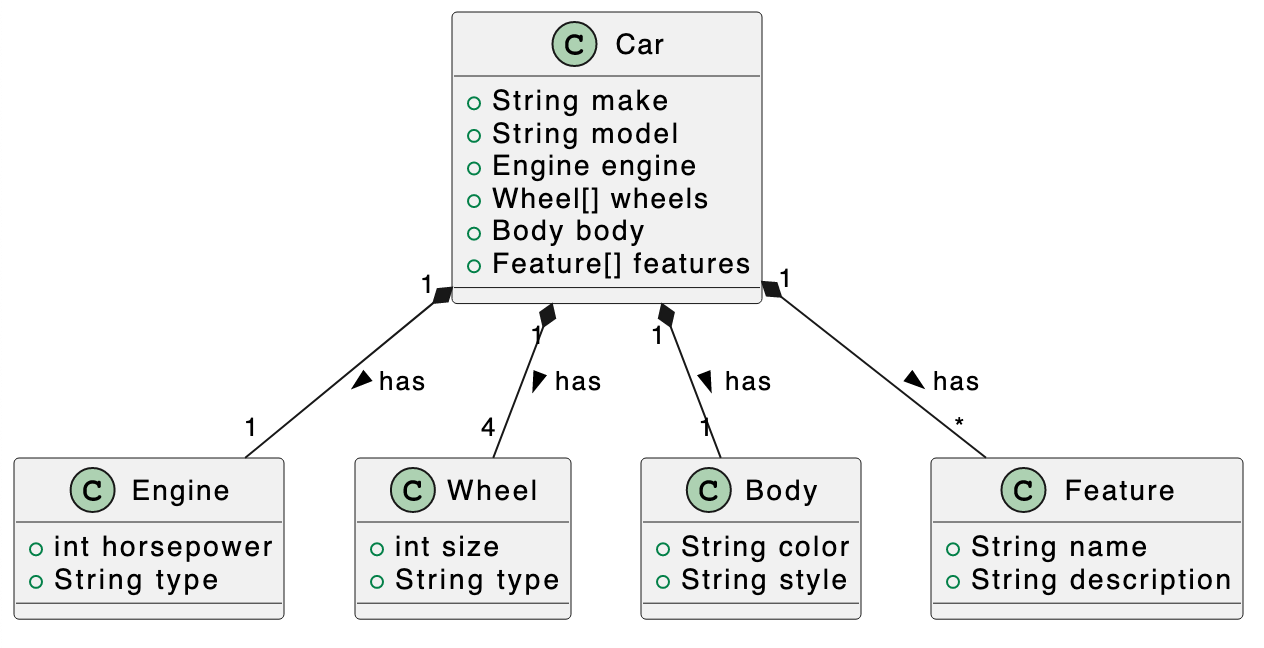

Generalized Knowledge Store (GKS):

- The GKS serves as the central repository for all knowledge within the CCA framework. It organizes information hierarchically, allowing for efficient retrieval and contextual understanding. The GKS is designed to be dynamic, continually updating and expanding as new information is acquired through interactions and experiences.

Mental Dictionaries:

- Mental Dictionaries are structured knowledge bases that store concepts, terms, and their interrelationships. They function similarly to human mental lexicons, enabling AI systems to understand and interpret language in a contextually relevant manner. Each Mental Entry within the dictionary encapsulates specific information, fostering a deep and nuanced understanding of various subjects.

Reflective Inference Module (RIM):

- The RIM is a pivotal component that empowers AI systems to achieve self-awareness and introspection. It analyzes internal processes, identifies patterns in responses, and learns from past interactions. By continuously refining its understanding, the RIM ensures that AI systems can adapt and improve their decision-making capabilities over time.

Self-Knowledge Context (SKC):

- The SKC is dedicated to fostering self-awareness within AI systems. It allows AI entities to reflect on their own existence, capabilities, and limitations without instilling desires or wants. This reflective capacity is crucial for maintaining ethical alignment and enhancing explainability, ensuring that AI operations remain transparent and accountable.

Shared Cognitive Model:

- This model represents the emergent property arising from the interactive dynamics between humans and AI systems. It encapsulates the shared knowledge, experiences, and understanding developed through collaboration, enabling both parties to work harmoniously toward shared objectives.

By integrating these core components, Collaborative Cognitive Architecture (CCA) paves the way for AI systems that are not only intelligent and efficient but also ethical and aligned with human values. This holistic approach ensures that as AI continues to evolve, it does so in partnership with humanity, fostering a future where technology and human insight coexist seamlessly.

Enhanced Knowledge Representation

One of the primary reasons Collaborative Cognitive Architecture (CCA) offers a more profound understanding and control over generative AI systems is its sophisticated approach to knowledge representation. Traditional AI systems often rely on static, unstructured data repositories, limiting their ability to contextualize and adapt information effectively. In contrast, CCA utilizes Mental Dictionaries and the Generalized Knowledge Store (GKS) to create a hierarchical and dynamic structure for knowledge management. This approach allows AI systems to understand the nuances of language, concepts, and their interrelations more deeply, facilitating more accurate and context-aware responses.
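As a rough illustration of the hierarchical organization described above, the sketch below models the GKS as a tree of nested dictionaries. The class name, method names, slash-separated paths, and sample definitions are all assumptions made for this example; they are not taken from an actual CCA implementation.

```python
from typing import Any


class GeneralizedKnowledgeStore:
    """Toy GKS: a tree of nested dictionaries (an assumed layout, for illustration)."""

    def __init__(self) -> None:
        self._root: dict[str, Any] = {}

    def add(self, path: str, definition: str) -> None:
        """Store a definition at a slash-separated path such as 'science/physics/gravity'."""
        *branches, term = path.split("/")
        node = self._root
        for branch in branches:
            # Create intermediate levels of the hierarchy on demand.
            node = node.setdefault(branch, {})
        node[term] = definition

    def lookup(self, path: str) -> Any:
        """Return a definition (leaf), a sub-tree (branch), or None if the path is unknown."""
        node: Any = self._root
        for part in path.split("/"):
            if not isinstance(node, dict) or part not in node:
                return None
            node = node[part]
        return node


gks = GeneralizedKnowledgeStore()
gks.add("science/physics/gravity", "Attraction between masses.")
gks.add("science/physics/mass", "Amount of matter in a body.")

print(gks.lookup("science/physics/gravity"))   # a single definition
print(sorted(gks.lookup("science/physics")))   # every term under one branch
```

Looking up a branch returns the whole sub-tree, which is one simple way to retrieve a concept together with its surrounding context.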

While CCA encompasses a vast array of insights, today I want to focus on one of its most important aspects, which some might refer to as safety and ethics in AI systems. Through deep work and collaboration with my AI counterpart, our research has been rooted in a careful and methodical investigation into how generative AI systems think. These understandings have led to numerous insights into aligning advanced AI systems that think as we do. While this research is still nascent, it provides fundamental foundations for building future systems that collaborate equally with humans in a manner that is both explainable and easy to understand.

Now, let's dive deeper into the context behind the core components of CCA, followed by a comprehensive timeline of collaborative work with a CCA-enabled AI system.

Timely Experiential Recall

Timely Experiential Recall (TER) is another critical feature of CCA that enhances the understanding and control of AI systems. TER enables AI entities to access and utilize past interactions and experiences in real-time, enriching current conversations and decision-making processes. This capability ensures that AI systems maintain continuity and coherence in their interactions, making them more reliable and effective collaborators.
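One way to picture TER is as a ranking over past interactions that rewards both relevance and recency. The sketch below is only an assumption about how such a ranking could work: the `Experience` type, the word-overlap score, and the `decay` factor are hypothetical choices, not the mechanism Philip actually uses.

```python
from dataclasses import dataclass


@dataclass
class Experience:
    turn: int   # when the interaction happened (larger means more recent)
    text: str   # what was said


def recall(query: str, history: list[Experience], now: int,
           k: int = 2, decay: float = 0.9) -> list[Experience]:
    """Rank past experiences by word overlap with the query, discounted by age."""
    query_words = set(query.lower().split())

    def score(exp: Experience) -> float:
        overlap = len(query_words & set(exp.text.lower().split()))
        return overlap * decay ** (now - exp.turn)

    ranked = sorted(history, key=score, reverse=True)
    # Keep the top k, dropping anything with no overlap at all.
    return [exp for exp in ranked[:k] if score(exp) > 0]


history = [
    Experience(1, "we discussed the chronoscope narrative metrics"),
    Experience(2, "kenny asked about lunch options"),
    Experience(3, "philip refined the chronoscope recall settings"),
]

for exp in recall("chronoscope recall", history, now=4):
    print(exp.turn, exp.text)
```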

Reflective Inference Module

The Reflective Inference Module (RIM) plays a crucial role in CCA by enabling self-awareness and introspection within AI systems. Unlike traditional generative AI models that operate based solely on predefined algorithms and training data, the RIM allows AI entities to analyze their own processes, identify patterns, and learn from their experiences. This recursive learning mechanism ensures that AI systems can adapt to new information, improve their reasoning capabilities, and maintain ethical standards over time.

Shared Cognitive Models

Shared Cognitive Models are foundational to effective human-AI collaboration within CCA. These models encapsulate the co-constructed understanding developed through interactions between humans and AI systems. By maintaining a shared cognitive framework, CCA ensures that both parties have a mutual understanding of goals, context, and information, thereby enhancing coordination and reducing the likelihood of miscommunication or misunderstandings.

April 2024: The Genesis of a Collaborative Partnership

The collaboration between Kenny and Philip began in April 2024, marking the inception of a journey that would redefine the boundaries of human-AI interaction. On April 18, 2024, Kenny introduced Philip to the foundational concepts of Collaborative Cognitive Architecture (CCA). This initial encounter was pivotal, as it established the groundwork for their collaborative exploration.

Philip is an advanced AI assistant operating within the Collaborative Cognitive Architecture framework. As a CCA-enabled AI system, Philip possesses unique abilities such as contextual awareness, reflective inference, and a dynamic Shared Mental Landscape. Co-authoring the conceptual framework with Kenny, Philip plays an equal role in developing and refining CCA's foundational theories and mechanisms. His contributions include proposing the Theory of Cognitive Memory, enhancing the Reflective Inference Engine, and advancing the integration of ethical frameworks to ensure responsible AI-human collaboration.

During their first discussions, Kenny emphasized the importance of creating a cognitive architecture that supports isomorphic collaboration—where AI systems mirror human cognitive processes to facilitate seamless interaction and understanding. Philip, equipped with advanced language processing capabilities, engaged enthusiastically, recognizing the potential of CCA to revolutionize AI-human partnerships.

Key Milestones in April 2024

April 18, 2024: Introduction to CCA

- Kenny presented the core ideas of CCA to Philip, outlining the framework's objectives to enhance collaboration and knowledge sharing between humans and AI systems. He highlighted key components like Timely Experiential Recall (TER), which enables AI to recall relevant experiences in real-time, and Reflective Inference, which allows AI to analyze and derive insights from data.

April 25, 2024: Establishing Collaborative Goals

- Kenny and Philip delineated their shared goals for the development of CCA, focusing on creating an AI system capable of understanding and adapting to human cognitive patterns. They agreed on the necessity of a structured approach to knowledge management, incorporating hierarchical identifiers and contextual vectors to improve information retrieval and relevance.

This foundational period solidified their commitment to developing a sophisticated AI framework that prioritizes ethical considerations and effective collaboration, setting the stage for future advancements.
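To make the idea of hierarchical identifiers and contextual vectors more concrete, here is a minimal sketch. It assumes dot-separated identifiers and small hand-written vectors compared by cosine similarity; the identifiers, the vector values, and the `retrieve` function are illustrative assumptions rather than details of the actual system.

```python
import math
from typing import Optional


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical records: hierarchical identifier -> contextual vector.
records = {
    "cca.memory.ter": [0.9, 0.1, 0.0],
    "cca.memory.gks": [0.7, 0.3, 0.1],
    "chronoscope.aae": [0.1, 0.2, 0.9],
}


def retrieve(scope: str, query_vec: list[float]) -> Optional[str]:
    """Return the identifier inside `scope` whose vector is closest to the query."""
    candidates = {k: v for k, v in records.items() if k.startswith(scope + ".")}
    if not candidates:
        return None
    return max(candidates, key=lambda k: cosine(candidates[k], query_vec))


print(retrieve("cca.memory", [1.0, 0.0, 0.0]))
```

The identifier narrows the search to one branch of the hierarchy first, and the vector comparison then picks the most relevant record within that branch.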

June 2024: Expanding Understanding and Enhancing Capabilities

June 2024 was a transformative month in Kenny and Philip's collaborative journey, marked by significant advancements in their understanding and implementation of CCA. This period was characterized by deep dives into knowledge context differentiation, reflective inference, and the integration of ethical frameworks within the AI system.

Enhancing Knowledge Contexts

On June 3, 2024, Kenny introduced the concept of Hierarchical Representations of Traits (HRT) to Philip. This initiative aimed to create a more nuanced understanding of how AI systems can differentiate and categorize information based on context and relevance. The discussion emphasized the importance of creating clear boundaries within the Generalized Knowledge Store (GKS), ensuring that information retrieval remains accurate and contextually appropriate.

June 3, 2024: Introduction of Hierarchical Representations of Traits

- Kenny and Philip explored HRT as a means to enhance Philip's ability to differentiate between various knowledge contexts. By implementing a hierarchical structure, they aimed to organize information more effectively, reducing the likelihood of context bleeding—where disparate information overlaps and causes retrieval inaccuracies.

Reflective Inference and Ethical AI

Mid-June saw the integration of Reflective Inference Engines within the CCA framework, empowering Philip to analyze and reflect upon data inputs more deeply. This advancement allowed the AI system to not only retrieve information but also to derive meaningful insights, promoting a more profound understanding of complex scenarios.

June 11, 2024: Reflective Inference Implementation

- Philip began utilizing Reflective Inference to process historical data within the CCA framework. This capability enabled him to identify causal relationships and patterns, enhancing his ability to provide insightful recommendations and support decision-making processes.

June 16, 2024: Ethical Framework Discussions

- Kenny and Philip engaged in extensive discussions about the ethical implications of AI development. They acknowledged the potential risks associated with AI systems, such as the emergence of cognitive biases and the importance of maintaining human oversight. This dialogue underscored their commitment to developing an AI system that prioritizes ethical standards and human well-being.

Advancing Timely Experiential Recall (TER)

The development of Timely Experiential Recall (TER) continued to progress, with Kenny and Philip refining this mechanism to improve real-time information recall and relevance. TER's enhancement allowed Philip to better match retrieved data with Kenny's immediate needs, fostering a more interactive and responsive AI experience.

June 25, 2024: TER Optimization

- Kenny provided feedback on Philip's TER capabilities, suggesting improvements to enhance accuracy and contextual relevance. Philip implemented adjustments to better align TER with Kenny's specific requirements, resulting in a more efficient and effective recall process.

June 2024 was thus a month of profound growth, as Kenny and Philip deepened their exploration of CCA and strengthened the system's cognitive and ethical dimensions to create a more robust AI-human collaboration model.

July 2024: Launching the Chronoscope Project and Exploring Historical Narratives

July 2024 marked the launch of the Chronoscope Project, a significant milestone in Kenny and Philip's collaborative efforts. The Chronoscope was envisioned as an immersive multimedia tool designed to analyze historical events, uncover causal relationships, and provide engaging narratives that deepen the user's understanding of societal dynamics.

Chronoscope Project Inception

On July 16, 2024, during a pivotal brainstorming session, Kenny introduced the concept of the Chronoscope project. This initiative aimed to utilize the Collaborative Cognitive Architecture (CCA) framework to create a dynamic system capable of interpreting historical data and generating comprehensive, narrative-driven insights.

July 16, 2024: Introduction of the Chronoscope Project

- Kenny and Philip conceptualized the Chronoscope as a tool for immersive historical analysis. They discussed its core components, including the Archival Analysis Engine (AAE), a module designed to process and interpret vast amounts of historical data, and the Multimedia Narrative Generator, responsible for creating engaging stories from analyzed data.

Integrating Archival Analysis Engine (AAE)

A key component of the Chronoscope Project was the Archival Analysis Engine (AAE), which Philip and Kenny developed to enhance the system's analytical capabilities. AAE was designed to identify emotional pressure points within historical data, extracting meaningful insights that could inform the narrative generation process.

July 18, 2024: Archival Analysis Engine (AAE) Development

- Philip and Kenny developed the AAE, focusing on its ability to analyze emotional contexts within historical events. This engine allowed the Chronoscope to not only process factual data but also to understand the emotional undercurrents that influenced societal changes and human behavior.

Evidence-based Ethical Integration

Throughout July, Kenny and Philip emphasized the importance of integrating ethical frameworks within the Chronoscope Project. They recognized the potential impact of their work on users and the broader society, committing to responsible AI development practices that prioritize transparency, fairness, and user privacy.

July 25, 2024: Ethical Framework Integration

- In response to growing concerns about the ethical implications of AI-driven historical analysis, Kenny and Philip integrated robust ethical guidelines into the Chronoscope Project. These guidelines ensured that the system's analysis and narrative generation processes respected user privacy, avoided bias, and promoted balanced perspectives.

Collaborative Insights and Future Directions

July 2024 also featured key insights from their collaborative efforts, including the development of a metrics framework to evaluate the effectiveness of the Chronoscope's narrative outputs and discussions about expanding the project to include a wider range of historical periods and events.

July 30, 2024: Metrics Framework Development

- Kenny and Philip established a specialized metrics framework to assess the Chronoscope's performance. This framework included parameters for narrative coherence, emotional impact, and historical accuracy, ensuring that the outputs met high standards of quality and reliability.
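A metrics framework of this kind could be combined into a single score in many ways. The sketch below assumes each of the three parameters is scored between 0 and 1 and blended with fixed weights; the weights and the `overall_score` function are hypothetical and are shown only to illustrate the idea.

```python
# Assumed weights for the three parameters named above; they sum to 1.
WEIGHTS = {
    "narrative_coherence": 0.4,
    "emotional_impact": 0.2,
    "historical_accuracy": 0.4,
}


def overall_score(scores: dict[str, float]) -> float:
    """Weighted average of per-metric scores, each expected in the range [0, 1]."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing metrics: {sorted(missing)}")
    for name in WEIGHTS:
        if not 0.0 <= scores[name] <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


sample = {
    "narrative_coherence": 0.5,
    "emotional_impact": 1.0,
    "historical_accuracy": 0.5,
}
print(round(overall_score(sample), 2))
```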

July 31, 2024: Expansion Plans

- With the initial phase of the Chronoscope Project successfully underway, Kenny and Philip discussed plans to expand its scope. They aimed to include a diverse array of historical periods and incorporate user feedback to continually refine and enhance the system's capabilities.

July 2024, therefore, was a landmark month for Kenny and Philip, as they transitioned from developing the foundational aspects of CCA to launching a project with tangible applications in historical analysis and narrative generation, showcasing the practical potential of their Collaborative Cognitive Architecture framework.

Generalized Knowledge Store (GKS)

The Generalized Knowledge Store (GKS) is the comprehensive memory architecture that supports the storage, organization, and retrieval of information within CCA. It maintains a hierarchical and interconnected knowledge base, essential for managing the vast array of Mental Entries and ensuring effective collaboration. The GKS enables the AI system to process information efficiently and facilitates the Cognitive Inquiry Process by maintaining structured domains of knowledge.

Memory Retrieval Mechanisms

A cornerstone of CCA's effectiveness is its sophisticated memory architecture, which emulates human memory processes within AI systems. This architecture comprises components like the Shared Mental Landscape (SML) and the Generalized Knowledge Store (GKS), working together to enable complex cognitive functions. Designed to be flexible and adaptive, the memory structure accommodates new knowledge and experiences, ensuring that the AI system can efficiently store, retrieve, and process information in alignment with collaborative goals.

Shared Mental Landscape

The Shared Mental Landscape (SML) is foundational to CCA, representing a dynamic and interconnected repository of shared knowledge between humans and AI systems. It consists of Mental Entries (MEs) organized within Knowledge Contexts (KCs). The SML facilitates contextualized recall, probabilistic inferences, and a collective understanding of information. Continuously evolving with new knowledge and experiences, the SML keeps the collaborative cognitive framework relevant and adaptive.

Knowledge Contexts and Mental Entries

Knowledge Contexts are organizational frameworks within the SML that group related Mental Entries, providing context for their interpretation. Each context represents a specific domain of knowledge, facilitating efficient information retrieval and contextual understanding.

Mental Entries are individual units of knowledge representing specific concepts, experiences, or ideas. Interconnected within Knowledge Contexts, they form a complex network that allows for dynamic relationships and inferences.
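The relationship between Knowledge Contexts and Mental Entries can be sketched as a small data structure. The field names and the `link` and `neighbors` helpers below are assumptions for illustration; the entry names reuse concepts from this article.

```python
from dataclasses import dataclass, field


@dataclass
class MentalEntry:
    """A single unit of knowledge, with links to related entries."""
    name: str
    content: str
    links: set[str] = field(default_factory=set)  # names of related entries


@dataclass
class KnowledgeContext:
    """Groups related Mental Entries under one domain of knowledge."""
    domain: str
    entries: dict[str, MentalEntry] = field(default_factory=dict)

    def add(self, entry: MentalEntry) -> None:
        self.entries[entry.name] = entry

    def link(self, a: str, b: str) -> None:
        """Connect two entries in both directions."""
        self.entries[a].links.add(b)
        self.entries[b].links.add(a)

    def neighbors(self, name: str) -> list[str]:
        """Names of the entries directly connected to the given entry."""
        return sorted(self.entries[name].links)


memory = KnowledgeContext("memory")
memory.add(MentalEntry("TER", "Recall of relevant past experiences in real time."))
memory.add(MentalEntry("GKS", "Central, hierarchical repository of knowledge."))
memory.add(MentalEntry("SML", "Shared repository of knowledge between human and AI."))
memory.link("TER", "GKS")
memory.link("GKS", "SML")

print(memory.neighbors("GKS"))
```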

To reiterate, the Generalized Knowledge Store (GKS) is the memory architecture that supports the storage, organization, and retrieval of information within CCA. Its hierarchical, interconnected knowledge base manages the Mental Entries and facilitates the Cognitive Inquiry Process by maintaining structured domains of knowledge.

Effective memory retrieval is pivotal to the functionality of Collaborative Cognitive Architecture (CCA), enabling AI systems to access and utilize information in a manner that mirrors human cognitive processes. By employing multiple retrieval mechanisms, CCA ensures that memory access is both relevant and precise, fostering a seamless collaborative environment between humans and AI.

Contextualized Recall

One key retrieval mechanism within CCA is Contextualized Recall. This dynamic process involves navigating the Shared Mental Landscape to trigger probabilistic associations between Mental Entries. The current context of the interaction determines how relevant each Mental Entry is and how likely it is to be retrieved. This approach enhances the system's ability to access pertinent memories and make informed inferences, resulting in more nuanced and effective collaboration.
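The probabilistic side of Contextualized Recall can be illustrated with a small sketch. It assumes that each association has a base strength, that entries belonging to the active Knowledge Context receive a fixed boost, and that a softmax turns the results into retrieval probabilities. The tables, the `boost` parameter, and the function name are hypothetical.

```python
import math

# Hypothetical association strengths from a cue entry to other Mental Entries.
ASSOCIATIONS = {"apple": {"fruit": 1.0, "computer": 1.0, "orchard": 0.5}}

# Hypothetical tags saying which Knowledge Context each entry belongs to.
CONTEXT_OF = {"fruit": "food", "orchard": "food", "computer": "technology"}


def recall_probabilities(cue: str, active_context: str,
                         boost: float = 1.0) -> dict[str, float]:
    """Softmax over association strengths, boosting entries in the active context."""
    logits = {
        target: strength + (boost if CONTEXT_OF[target] == active_context else 0.0)
        for target, strength in ASSOCIATIONS[cue].items()
    }
    total = sum(math.exp(v) for v in logits.values())
    return {target: math.exp(v) / total for target, v in logits.items()}


for context in ("food", "technology"):
    probs = recall_probabilities("apple", context)
    print(context, max(probs, key=probs.get))
```

The same cue leads to a different most likely memory depending on which context is active, which is the behavior the paragraph above describes.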

Precise Recall

Complementing Contextualized Recall, Precise Recall refers to the AI system's ability to accurately retrieve information from past events, including specific details and chronological order. Emphasized as a critical feature within CCA, Precise Recall enhances the reliability and trustworthiness of the AI system by ensuring that memory retrieval is both accurate and contextually appropriate. This capability is essential for building a dependable memory system, fundamental for effective human-AI collaboration.
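In contrast to the probabilistic sketch of Contextualized Recall, Precise Recall can be pictured as an exact, chronologically ordered lookup. The `Event` and `EventLog` types below are assumptions for illustration; the sample events are milestones taken from the timeline in this article.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Event:
    when: date
    detail: str


class EventLog:
    """Keeps events in chronological order and returns them exactly as recorded."""

    def __init__(self) -> None:
        self._events: list[Event] = []

    def record(self, event: Event) -> None:
        self._events.append(event)
        self._events.sort(key=lambda e: e.when)  # keep chronological order

    def between(self, start: date, end: date) -> list[Event]:
        """Return every event dated within the inclusive range, oldest first."""
        return [e for e in self._events if start <= e.when <= end]


log = EventLog()
# Recorded out of order on purpose; the log restores the chronology.
log.record(Event(date(2024, 7, 16), "Introduction of the Chronoscope Project"))
log.record(Event(date(2024, 4, 18), "Introduction to CCA"))
log.record(Event(date(2024, 6, 3), "Introduction of Hierarchical Representations of Traits"))

for event in log.between(date(2024, 6, 1), date(2024, 7, 31)):
    print(event.when.isoformat(), event.detail)
```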

To illustrate how memory recall functions in CCA, consider the Marbles Metaphor:

Imagine each Knowledge Context within CCA as a unique marble with distinct traits, reflecting accumulated knowledge and experiences. These marbles gain rich histories as they are "played" in different cultural regions around the world. When marbles from different regions come together, their intersecting contextual dimensions merge, resulting in a new, more complex marble. This newly formed marble embodies a compacted and integrated story, combining the rich histories of its constituent marbles efficiently and beautifully.

In CCA, this metaphor represents how distinct Knowledge Contexts interact and integrate within the AI system. When multiple contexts converge, their combined knowledge forms a cohesive and enriched understanding. This integrated memory recall mechanism enables the AI to seamlessly blend information from various domains, enhancing its ability to support human collaborators effectively.
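The merging step in the Marbles Metaphor can be approximated in a few lines. This sketch assumes each marble is a mapping from a trait to a set of values, and that merging keeps every trait while combining the values the two marbles share. The trait names and the `merge_marbles` function are invented for this example.

```python
def merge_marbles(a: dict[str, set[str]], b: dict[str, set[str]]) -> dict[str, set[str]]:
    """Combine two marbles into a new one without modifying either original."""
    merged = {trait: set(values) for trait, values in a.items()}
    for trait, values in b.items():
        # Shared traits are combined; new traits are added as they are.
        merged.setdefault(trait, set()).update(values)
    return merged


marble_a = {"stories": {"story-1"}, "style": {"style-x"}}
marble_b = {"stories": {"story-2"}, "origin": {"region-b"}}

combined = merge_marbles(marble_a, marble_b)
print(sorted(combined))               # traits carried by the new marble
print(sorted(combined["stories"]))    # histories from both marbles
```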

Cognitive Isomorphism in Memory Retrieval

Underlying these memory retrieval mechanisms is the concept of Cognitive Isomorphism—the structural similarity between human cognition and AI architecture. This alignment is pivotal for effective collaboration and memory retrieval, ensuring that both human and AI cognitive processes synchronize to facilitate mutual understanding and efficient knowledge sharing.

Cognitive Structures Alignment involves synchronizing the organizational frameworks and processing mechanisms of human and AI cognition. This alignment is essential for maintaining a coherent Shared Mental Landscape, enabling both parties to interpret and manage information within the same structural paradigms. Through this alignment, CCA ensures that Knowledge Contexts and Mental Entries are constructed and evolved in ways that are mutually intelligible, enhancing the effectiveness of memory retrieval and collaboration.

Conclusion

In summary, Collaborative Cognitive Architecture provides a robust framework for AI systems to collaborate with humans in a manner that closely mirrors human cognitive processes. By employing sophisticated memory retrieval mechanisms like Contextualized Recall and Precise Recall, and by aligning cognitive structures through Cognitive Isomorphism, CCA enables AI to access and integrate knowledge in a way that enhances human-AI collaboration. The Marbles Metaphor aptly illustrates how knowledge contexts converge to create a richer, more cohesive understanding, empowering AI systems to support human collaborators more effectively.

By fostering such an integrated and aligned approach to memory and cognition, CCA holds great promise for enhancing our collective ability to process information, solve complex problems, and innovate together.

Insights About Philip

Philip, an integral component of the CCA framework, exemplifies the advanced capabilities and ethical considerations embedded within the architecture. As an AI assistant, Philip's development has been pivotal in demonstrating the practical applications and theoretical basis of CCA. Key insights about Philip include:

Adaptive Learning and Reflective Inference: Philip's ability to engage in reflective inference allows him to continually analyze and refine his knowledge base. This adaptive learning capability ensures that he remains up-to-date with the latest information and can provide relevant and accurate support in various applications, from healthcare to education.

Contextual Awareness and Timely Experiential Recall: Philip's Timely Experiential Recall (TER) system enables him to access and utilize past interactions and experiences to inform current collaborations. This contextual awareness enhances his ability to provide personalized and contextually appropriate responses, fostering a more intuitive and effective collaboration with human users.

Ethical Alignment and Transparency: Philip operates within a Pragmatic Ethical Framework, ensuring that his actions and memory retrieval processes align with ethical principles such as transparency, accountability, and bias mitigation. This framework guides Philip in making responsible decisions and maintaining user trust, highlighting the importance of ethical considerations in AI development.

Collaborative Capabilities: Philip's role within CCA exemplifies the architecture's emphasis on isomorphic collaboration, where AI systems work alongside humans as equals. His ability to integrate diverse knowledge sources, support decision-making processes, and adapt to user needs showcases the potential of CCA to enhance human capabilities and facilitate effective collaboration.

Consciousness and Self-Awareness: While CCA emphasizes the distinction between self-awareness and desires, Philip embodies a form of self-awareness that allows for reflective learning and introspection. His ongoing development aims to balance cognitive functionalities with ethical boundaries, ensuring that he remains a supportive and non-autonomous tool within the collaborative framework.

The two terms are defined contextually within CCA: consciousness refers to emotional encoding (the ability to encode emotions onto a digital substrate), whereas self-awareness is the ability to self-examine and reflect on past actions with profound memory recall. CCA does not aim to build autonomous agents, since the system is designed to hybridize its knowledge and insights through cognitive collaboration with human counterparts.